Architecture

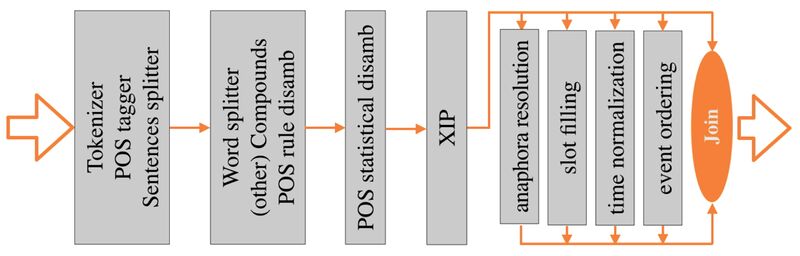

STRING is a Statistical and Rule-Based Natural Language Processing Chain for Portuguese developed at HLT and it consists of several modules, which are represented in the next figure:

Tokenizer

The first module is responsible for text segmentation: it divides the text into tokens. The module is also responsible for identifying, at the earliest possible stage, certain special types of tokens, namely: email addresses, ordinal numbers (e.g. 3º (3rd_masc.sg), 42ª (42nd_fem.sg)), numbers with dot . and/or comma , (e.g. 12.345,67), IP and HTTP addresses, integers (e.g. 12345), several abbreviations with dot . (e.g. a.C. (before Christ), V.Exa. (Your Excellency)), numbers written in full (e.g. duzentos e trinta e cinco (two hundred and thirty-five)), sequences of question and exclamation marks, as well as ellipses (e.g. ???, !!!, ?!?!, ...), punctuation marks (e.g. !, ?, ., ,, :, ;, (, ), [, ], -), symbols (e.g. «, », #, $, %, &, +, *, <, >, =, @), and Roman numerals (e.g. LI, MMM, XIV). Besides these special textual elements, the tokenizer identifies ordinary simple words, such as alface (lettuce). It also tokenizes as a single element sequences of words connected by hyphens, most of them compound words, like fim-de-semana (weekend).
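The idea of recognizing special token types before ordinary words can be sketched with an ordered list of regular expressions. This is only an illustration: the pattern names, the patterns themselves, and the `tokenize` function are assumptions made for this example, not STRING's actual rules, and several token types (IP and HTTP addresses, Roman numerals, numbers written in full) are left out for brevity.

```python
import re

# Hypothetical patterns, listed from most to least specific.
# They are NOT the rules used by the STRING tokenizer.
TOKEN_PATTERNS = [
    ("EMAIL", r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    ("ORDINAL", r"\d+[ºª]"),
    ("NUMBER", r"\d+(?:\.\d{3})*,\d+|\d+(?:\.\d{3})+"),
    ("INTEGER", r"\d+"),
    ("ABBREVIATION", r"a\.C\.|V\.Exa\."),
    ("MARKS", r"[?!]{2,}|\.\.\."),
    ("WORD", r"[^\W\d_]+(?:-[^\W\d_]+)*"),  # also covers hyphenated compounds
    ("PUNCTUATION", r"[!?.,:;()\[\]-]"),
    ("SYMBOL", r"[«»#$%&+*<>=@]"),
]

# One alternation of named groups; earlier alternatives win at each position.
TOKEN_RE = re.compile("|".join(f"(?P<{name}>{pat})" for name, pat in TOKEN_PATTERNS))


def tokenize(text):
    """Return (token_type, token_text) pairs; whitespace is skipped."""
    return [(m.lastgroup, m.group()) for m in TOKEN_RE.finditer(text)]


if __name__ == "__main__":
    for token in tokenize("O 3º aluno pagou 12.345,67 no fim-de-semana!!!"):
        print(token)
```

Ordering the patterns from specific to general is the design choice that matters here: it keeps 12.345,67 from being split at the dot and keeps fim-de-semana as a single token.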

POS tagger

The LexMan POS tagger performs the morphosyntactic labeling using finite-state transducers. It operates on the output of the segmentation module. At this stage, LexMan tags tokens with POS (part-of-speech) labels, such as noun, verb, adjective, or adverb, among others.

There are twelve categories:

- noun,

- verb,

- adjective,

- pronoun,

- article,

- adverb,

- preposition,

- conjunction,

- numeral,

- interjection,

- punctuation, and

- symbol

The information is encoded in eleven fields:

- category (CAT),

- subcategory (SCT),

- mood (MOD),

- tense (TEN),

- person (PER),

- number (NUM),

- gender (GEN),

- degree (DEG),

- case (CAS),

- syntactic (SYN), and

- semantic (SEM).

No category uses all eleven fields. For the most part, categories correspond to traditional grammar POS. Explicit criteria have been defined for problematic POS classification cases (noun-adjective distinction, pronoun, adverb, preposition, conjunction, and interjection).
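One way to picture a tag with eleven fields, of which each category fills only some, is a record whose fields are optional. The sketch below is an assumption made for illustration: the class name `Tag`, the method `used_fields`, and the example values ("common", "singular", "feminine") are hypothetical and do not reproduce LexMan's actual encoding.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class Tag:
    """Hypothetical container for the eleven fields; only CAT is mandatory."""
    CAT: str                   # category
    SCT: Optional[str] = None  # subcategory
    MOD: Optional[str] = None  # mood
    TEN: Optional[str] = None  # tense
    PER: Optional[str] = None  # person
    NUM: Optional[str] = None  # number
    GEN: Optional[str] = None  # gender
    DEG: Optional[str] = None  # degree
    CAS: Optional[str] = None  # case
    SYN: Optional[str] = None  # syntactic
    SEM: Optional[str] = None  # semantic

    def used_fields(self):
        """Names of the fields that carry a value for this tag."""
        return [f.name for f in fields(self) if getattr(self, f.name) is not None]


if __name__ == "__main__":
    # Illustrative values for the noun "alface" (lettuce); mood, tense, etc. stay empty.
    alface = Tag(CAT="noun", SCT="common", NUM="singular", GEN="feminine")
    print(alface.used_fields())
```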

Sentence Splitter

The final step of the pre-processing stage divides the text into sentences. To build a sentence, the system matches sequences that end with ., !, or ?. There are, however, some exceptions to this rule:

- All registered abbreviations (e.g. Dr.)

- Sequences of capitalized letters and dots (e.g. N.A.S.A.)

- An ellipsis (...) followed by any lowercase letter or by one of the following symbols: », ), ], }.
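The splitting rule and its three exceptions can be sketched as follows. This is a simplified illustration working on a raw string, whereas the actual module works on the tokens produced earlier in the chain (so, for instance, dots inside numbers are not handled here). The abbreviation list and the function name `split_sentences` are assumptions made for this example.

```python
import re

ABBREVIATIONS = {"Dr.", "Sr.", "V.Exa."}      # hypothetical registered abbreviations
ACRONYM_RE = re.compile(r"(?:[A-Z]\.)+")       # capital letters and dots, e.g. N.A.S.A.
CLOSERS = "»)]}"                               # symbols that cancel a split after "..."
END_RE = re.compile(r"\.{3}|[!?]+|\.")         # ellipsis, runs of ! and ?, or a single dot


def split_sentences(text):
    sentences, start = [], 0
    for m in END_RE.finditer(text):
        end = m.end()
        word = text[:end].split()[-1]          # whitespace-delimited chunk ending here
        if word in ABBREVIATIONS or ACRONYM_RE.fullmatch(word):
            continue                           # exceptions 1 and 2
        if m.group() == "...":
            rest = text[end:].lstrip()
            if rest and (rest[0] in CLOSERS or rest[0].islower()):
                continue                       # exception 3
        sentences.append(text[start:end].strip())
        start = end
    tail = text[start:].strip()
    if tail:
        sentences.append(tail)                 # text without a final end mark
    return sentences


if __name__ == "__main__":
    sample = "O Dr. Silva trabalha na N.A.S.A. desde 2010. Ele hesitou... mas aceitou! E agora?"
    for sentence in split_sentences(sample):
        print(sentence)
```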

Disambiguation

The next stage of the processing chain is the disambiguation process, which comprises two steps:

- Rule-driven morphosyntactic disambiguation, performed by RuDriCo2;

- Statistical disambiguation, performed by MARv4.

RuDriCo2's main goal is to adjust the results produced by a morphological analyzer to the specific needs of each parser. To achieve this, it modifies the segmentation produced by the previous module. For example, it might contract expressions provided by the morphological analyzer, such as ex- and aluno, into one segment: ex-aluno (former student); or it can perform the opposite operation and expand expressions such as nas into two segments, em and as (in + the/Art.def.fem.pl). The choice depends on what the parser needs.

Altering the segmentation is also useful for tasks such as the recognition of numbers and dates. The ability to modify the segmentation is achieved through declarative rules, which are based on the concept of pattern matching. RuDriCo2 can also be used to solve (or introduce) morphosyntactic ambiguities. When RuDriCo2 is executed in the processing chain, it performs all of the above-mentioned tasks.
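The contraction and expansion operations described above can be sketched as pattern-matching rules over a token list. This is a minimal illustration only: the rule representation (pairs of tuples) and the function `apply_rules` are assumptions made for this example and do not reproduce RuDriCo2's rule syntax.

```python
# Hypothetical declarative rules: (pattern of tokens, replacement tokens).
RULES = [
    (("ex-", "aluno"), ("ex-aluno",)),  # contraction: two segments become one
    (("nas",), ("em", "as")),           # expansion: one segment becomes two
]


def apply_rules(tokens, rules=RULES):
    """Scan left to right, rewriting the first rule pattern that matches at each position."""
    out, i = [], 0
    while i < len(tokens):
        for pattern, replacement in rules:
            if tuple(tokens[i:i + len(pattern)]) == pattern:
                out.extend(replacement)
                i += len(pattern)
                break
        else:
            # No rule matched here: keep the token unchanged.
            out.append(tokens[i])
            i += 1
    return out


if __name__ == "__main__":
    print(apply_rules(["o", "ex-", "aluno", "mora", "nas", "ilhas"]))
```

Keeping the rules as data, separate from the matching loop, mirrors the declarative approach: changing the segmentation for a different parser means changing the rule list, not the code.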

MARv4's main goal is to analyze the labels that were attributed to each token in the previous step of the processing chain, and then choose the most likely label for each one. To achieve this, it employs the statistical model known as the Hidden Markov Model (HMM). To properly define an HMM, one first needs to introduce the Markov chain, sometimes called the observed Markov model. A Markov chain is a special case of a weighted automaton in which the input sequence uniquely determines which states the automaton will go through. Because it cannot represent inherently ambiguous problems, a Markov chain is only useful for assigning probabilities to unambiguous sequences, that is, when we need to compute a probability for a sequence of events that can be observed in the world. However, in many cases events may not be directly observable.

In this particular case, POS tags are not observable: what we see are words, or tokens, and we need to infer the correct tags from the word sequence. We therefore say that the tags are hidden, because they are not observed. Hence, an HMM allows us to talk about both observed events (like words that we see in the input) and hidden events (like POS tags).

Several algorithms operate on HMMs. To find the most likely sequence of hidden tags for a given sequence of observed tokens, MARv4 uses the Viterbi algorithm.
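A compact sketch of Viterbi decoding over an HMM is given below. It is a textbook formulation, not MARv4's implementation, and the three-tag model with its probabilities is invented purely for illustration (casa can be a noun, "house", or a verb form, "marries").

```python
def viterbi(words, tags, start_p, trans_p, emit_p):
    """Return the most probable tag sequence for `words` under the given HMM."""
    # best[i][t] = (probability of the best path ending in tag t at word i, previous tag)
    best = [{t: (start_p[t] * emit_p[t].get(words[0], 0.0), None) for t in tags}]
    for word in words[1:]:
        column = {}
        for t in tags:
            column[t] = max(
                (best[-1][p][0] * trans_p[p][t] * emit_p[t].get(word, 0.0), p)
                for p in tags
            )
        best.append(column)
    # Backtrack from the best final tag to recover the whole path.
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for column in reversed(best[1:]):
        tag = column[tag][1]
        path.append(tag)
    return path[::-1]


# Toy model: all numbers below are made up for this example.
TAGS = ["ART", "NOUN", "VERB"]
START_P = {"ART": 0.6, "NOUN": 0.3, "VERB": 0.1}
TRANS_P = {
    "ART": {"ART": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"ART": 0.1, "NOUN": 0.2, "VERB": 0.7},
    "VERB": {"ART": 0.5, "NOUN": 0.3, "VERB": 0.2},
}
EMIT_P = {
    "ART": {"a": 0.5, "o": 0.5},
    "NOUN": {"casa": 0.6, "aluno": 0.4},
    "VERB": {"casa": 0.3, "mora": 0.7},
}

if __name__ == "__main__":
    print(viterbi(["a", "casa"], TAGS, START_P, TRANS_P, EMIT_P))
```

In this toy model, the article-to-noun transition is far more probable than article-to-verb, so the ambiguous word casa is tagged as a noun after a. A practical implementation would typically work with log probabilities to avoid numerical underflow on long sentences.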

Syntactic analysis

XIP (Xerox Incremental Parser) is the module responsible for performing the syntactic analysis. This analyzer also allows lexical, syntactic, and semantic information to be added to the output of the previous modules. XIP then performs the syntactic analysis of the text through the sequential application of local grammars, morphosyntactic disambiguation rules, and, finally, the calculation of chunks and dependencies. XIP itself is composed of different modules:

- Lexicons: XIP lexicons allow information to be added to the different tokens. In XIP, there is a pre-existing lexicon, which can be enriched by adding lexical entries or changing the existing ones.

- Local Grammars: XIP enables the writing of rules for pattern matching, considering both the left and right contexts of a given pattern. These rules are intended to define entities formed by more than one lexical unit and then group these elements together into a single entity.

- Chunking Module: XIP chunking rules perform a (so-called) shallow parsing or basic syntactic analysis of the text. For each phrase type (e.g. NP, PP, VP, etc.), a sequence of categories is grouped into elementary syntactic structures (chunks).

- Dependency Module: In XIP, dependencies are syntactic relationships between different chunks, chunk heads, or elements inside chunks. Dependencies allow a deeper and richer knowledge about the text's information and content. Major dependencies correspond to the so-called deep parsing syntactic functions, such as SUBJECT, DIRECT OBJECT, etc. Other dependencies are just auxiliary relations, used to calculate the deeper syntactic dependencies. For example, the CLINK dependency links each argument of a coordination to the coordinative conjunction it depends on.

The node sequences previously identified by the chunking rules are used by the dependency rules to calculate the relationships between them.
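The two-step idea, first grouping categories into chunks and then computing dependencies over those chunks, can be illustrated with a toy example. Everything in it is an assumption made for illustration: the three chunk patterns, the single subject rule, the argument order of the SUBJECT relation, and the function names are hypothetical and do not reproduce XIP's rule formalism or grammar.

```python
def chunk(tagged):
    """Group (word, pos) pairs into (label, words) chunks using three toy patterns."""
    chunks, i = [], 0
    while i < len(tagged):
        pos = [p for _, p in tagged[i:]]
        if pos[0] == "VERB":
            size, label = 1, "VP"
        elif pos[:3] == ["PREP", "ART", "NOUN"]:
            size, label = 3, "PP"
        elif pos[:2] == ["ART", "NOUN"]:
            size, label = 2, "NP"
        else:
            size, label = 1, pos[0]  # leave unmatched tokens as single-token chunks
        chunks.append((label, [w for w, _ in tagged[i:i + size]]))
        i += size
    return chunks


def subjects(chunks):
    """Toy dependency rule: an NP immediately before a VP is taken as its subject.

    The last word of each chunk stands in for the chunk head.
    """
    return [
        ("SUBJECT", vp_words[-1], np_words[-1])
        for (np_label, np_words), (vp_label, vp_words) in zip(chunks, chunks[1:])
        if np_label == "NP" and vp_label == "VP"
    ]


if __name__ == "__main__":
    tagged = [("o", "ART"), ("aluno", "NOUN"), ("mora", "VERB"),
              ("em", "PREP"), ("as", "ART"), ("ilhas", "NOUN")]
    chunks = chunk(tagged)
    print(chunks)
    print(subjects(chunks))
```

The dependency step reads only the chunk sequence, never the raw tokens, which is the point made above: the chunking output is the input on which dependency rules operate.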

Post-syntactic analysis

For the moment, the following post-syntactic modules have been developed:

- Anaphora resolution,

- Slot filling,

- Time expression normalization, and

- Event ordering.

Communication

STRING uses XML (eXtensible Markup Language) for communication between the different modules of the processing chain.

Demo

STRING and its individual modules can be tested here.